Considering an on-premise deployment of Mojaloop?

Data sovereignty requirements and legal boundaries, coupled with a lack of in-country cloud hosting options, sometimes mean that an on-premise solution is the best way to meet the requirements of certain jurisdictions.

The majority of Mojaloop deployments to date have been cloud-based. There is good reason for this: cloud deployments scale rapidly, have a low cost of entry, and come with built-in security. There is currently an effort in the Mojaloop community to enable cloud offerings to meet data sovereignty and legal requirements by providing an Azure Stack option as a private in-country cloud. Despite these initiatives, budget constraints or the readiness of alternative solutions sometimes mean that a bare-metal, self-hosted on-premise deployment is the only option. An on-premise deployment also has advantages of its own: improved transparency on system limitations and associated costs, clear ownership of problems, improved visibility because every facet of the system is measurable, and low latency.

This blog takes you through the community view on how to characterize a MojaStack (the hardware you need to run an on-premise service) and some of the capacity planning considerations. It aligns with the work that David Fry, Miguel de Barros, and Steve Bristow presented at the PI20 Mojaloop Convening in Zanzibar.

On-premise performance characterization and optimization are ongoing activities that need to be rerun after each major architecture change to Mojaloop, or as ‘typical workload’ patterns change. At INFITX we have the knowledge and expertise to perform this function efficiently. Fill out this contact form and speak to us if you would like more information on how we can support your deployments.

What are the challenges with on-prem deployments?

On-premise deployments require every part of the system to be configured, and there is a lot to do. Appropriate data centers need to be selected, with adequate cooling, cabling, power, and security. Connectivity must be arranged that is internet-adjacent, diversely routed, and low in latency. The availability of the system should be planned and managed by defining maintenance windows across multiple sites, with a process to manage unplanned outages. Scaling should be considered and designed for: decide on the level of availability and performance the system should start at, and include a plan for how the system will scale as the business scales. Lastly, hardware must be identified with the correct levels of support, at the right price point, and delivered to the correct location, all while fitting within the business and return-on-investment plan of the hub operator.

Meeting the on-prem challenge starts with understanding availability

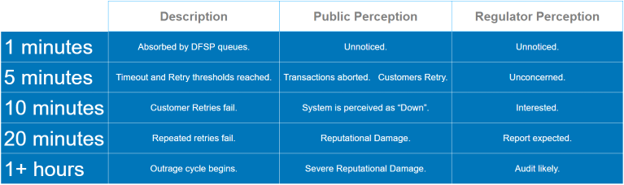

A good place to start meeting this on-prem challenge is to understand and agree to the expected availability of the system.

High-availability systems are expensive. The appropriate availability for a scheme depends on its risk appetite, the cost of downtime, and the level of investment. It is important that the appropriate level of availability is agreed and communicated. The chosen availability affects the replication model and, in turn, the number of data centers required, which is why it should be the starting point of the design.
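To make that trade-off concrete, here is a minimal sketch of the arithmetic behind an availability target. The targets shown are illustrative examples, not Mojaloop recommendations, and the function names are hypothetical. The multi-site formula assumes that sites fail independently and that any single surviving site can carry the full load, which a real design would need to verify.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def annual_downtime_minutes(availability: float) -> float:
    """Expected downtime per year, in minutes, for an availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


def combined_availability(site_availability: float, sites: int) -> float:
    """Availability of redundant sites.

    Assumption: failures are independent and one surviving site is enough
    to keep the service running.
    """
    if sites < 1:
        raise ValueError("sites must be at least 1")
    return 1.0 - (1.0 - site_availability) ** sites


if __name__ == "__main__":
    # Illustrative targets only.
    for target in (0.99, 0.999, 0.9999):
        minutes = annual_downtime_minutes(target)
        print(f"{target:.2%} availability -> {minutes:,.0f} minutes of downtime per year")
```

Under these assumptions, each extra "nine" cuts the allowed downtime by a factor of ten, and adding a second independent site is one way to reach a target that a single site cannot. This is why the availability decision drives the number of data centers.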

Selecting a scalable hardware stack

Next, the project working group defined a scalable hardware and software stack, which it termed the MojaStack. The MojaStack approach was chosen because it maximizes the system’s ability to scale. The hardware is uniform throughout the stack, widely available, and supported by multiple vendors. It has built-in persistence, eliminating the need for specialized hardware components. MojaStack will use an open-source software stack that extends the Mojaloop Infrastructure as Code (IaC) to manage failover and resilience in an automated and repeatable fashion.

Flexible scalability was deemed important because it is hard to know the requirements for a switch upfront: every switch has different traffic patterns, growth of a national-scale hub can be unpredictable, and buying hardware upfront can be a huge investment. The ability to add hardware as availability requirements, performance requirements, or traffic volumes grow enables the switch to grow on demand. This has the benefit of reducing upfront hardware costs.

Sizing the cluster to optimize price-performance

Sizing and allocating nodes within a cluster is important because it determines how resources are allocated to the processes that need them, which directly affects running costs and performance. The recommended approach is to:

- Validate the baseline workload assumptions

- Define patterns of transaction that constitute a ‘typical’ workload

- Define a ‘Standard’ hardware pattern

In order to optimize a system to meet a workload, all aspects of the system should be characterized. This enables building a model that can predict performance and facilitates optimization.
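As a rough illustration of how a characterized ‘standard’ node and a ‘typical’ workload feed a sizing estimate, here is a minimal sketch. Everything in it is an assumption for illustration: the names `NodeProfile` and `nodes_required`, the headroom and spare-node parameters, and above all the premise that throughput scales linearly with node count. A real model would come from the characterization measurements themselves.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeProfile:
    """Measured capacity of one 'standard' node (hypothetical structure)."""
    sustained_tps: float  # transfers per second sustained in characterization tests


def nodes_required(
    peak_tps: float,
    node: NodeProfile,
    headroom: float = 0.3,
    spare_nodes: int = 1,
) -> int:
    """Estimate the node count for a peak workload.

    Assumptions: throughput scales linearly with nodes, `headroom` is the
    fraction of each node's capacity kept free, and `spare_nodes` covers
    the loss of that many nodes without dropping below the peak.
    """
    if peak_tps < 0 or node.sustained_tps <= 0:
        raise ValueError("peak_tps must be >= 0 and sustained_tps must be > 0")
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    if spare_nodes < 0:
        raise ValueError("spare_nodes must be >= 0")
    usable_tps_per_node = node.sustained_tps * (1.0 - headroom)
    return math.ceil(peak_tps / usable_tps_per_node) + spare_nodes


if __name__ == "__main__":
    # Illustrative numbers only; not measured Mojaloop figures.
    standard_node = NodeProfile(sustained_tps=200.0)
    print(nodes_required(peak_tps=1000.0, node=standard_node, headroom=0.5))
```

Because the hardware is uniform, an estimate like this can be rerun whenever the workload assumptions or the measured node capacity change, and the difference in node count becomes the next hardware order.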

Characterizing the system

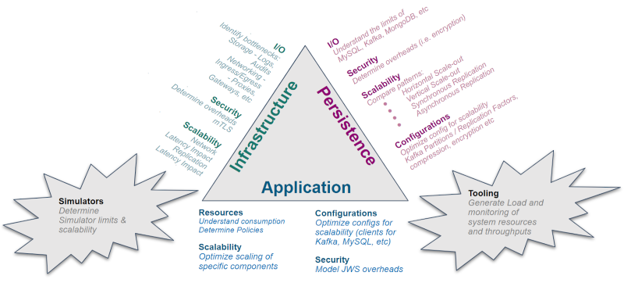

Characterizing the system involves characterizing its three pillars: the infrastructure (and security), the persistence, and the applications. Simulators and tooling are important enablers in this exercise and may themselves need to be characterized as a result.

Bringing this to production

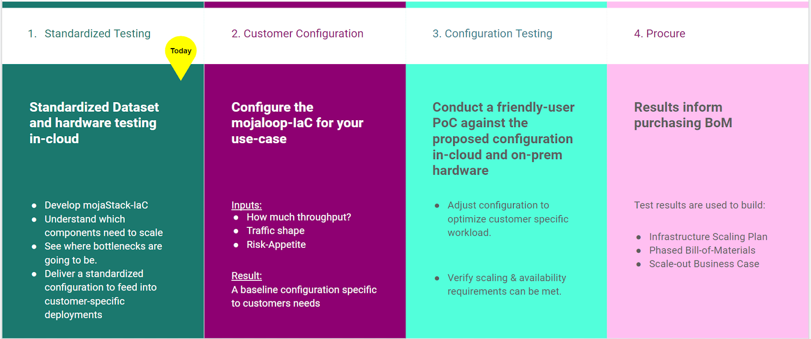

Building an on-prem, production-ready Mojaloop solution involves standardized testing, customer configuration, and configuration testing, followed by procuring and building the system. Standardized testing and base infrastructure configurations have been defined.

This, combined with the tooling and the defined, structured approach, means that Mojaloop is primed for an on-premise solution.

The next step is yours. Contact INFITX if you are interested in the work being carried out on this workstream.